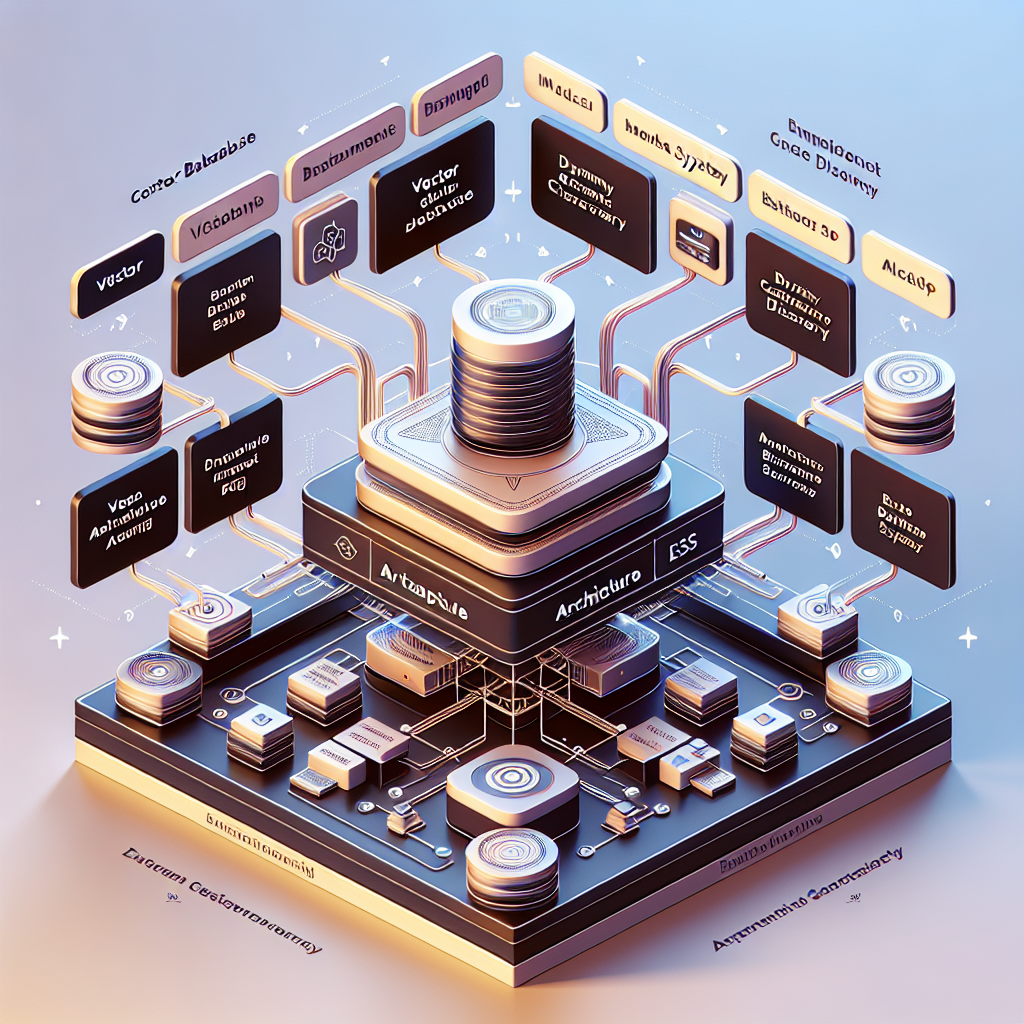

Modern AI applications need an architecture that goes beyond a standalone Large Language Model (LLM). Combining Vector Databases, metadata filters, RAG (retrieval-augmented generation) services, and Dynamic Context Discovery (DCD) mechanisms has become essential for building scalable and effective AI solutions. With these components, a system can retrieve domain-specific knowledge efficiently while keeping its answers accurate and consistent with live enterprise data.

This architecture targets several well-known problems. LLMs on their own tend to hallucinate, that is, to generate plausible but inaccurate information. They also have no access to private enterprise knowledge, which is crucial for precise responses in a business context. Pairing a Vector Database with RAG services lets an AI application retrieve relevant, context-rich information right before it generates a user-facing answer. Metadata filters (such as department or date) narrow the results to the most relevant documents, and they also give organizations the governance they need over which data is used.

Consider the specific problems this architecture addresses:

- Reducing the occurrence of hallucinations through grounding answers in reliable documents.

- Facilitating domain-specific responses powered by enterprise knowledge.

- Employing metadata constraints to filter outputs effectively.

- Managing vast document repositories with ease and efficiency.

- Ensuring resilience and continuity through robust backup mechanisms.

- Dynamically optimizing context selection using DCD tailored to user intent.

- Upholding compliance and access control across the system.

Together, these capabilities address the hurdles that AI applications most frequently encounter and make those applications more scalable and contextually relevant.

Implementation follows a systematic sequence. The process begins with document ingestion, where content from diverse sources (like PDFs or databases) is loaded into the system. Following ingestion, the documents are split into chunks and converted into embeddings: numerical vector representations that capture the meaning of the text or images.
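The chunk-and-embed step can be sketched as follows. This is a minimal illustration, not a production pipeline: the names `chunk_text` and `toy_embed`, the word-based chunk sizes, and the hashed bag-of-words vector are all assumptions made for this example. A real system would call an actual embedding model in place of `toy_embed`.

```python
import hashlib
import math


def chunk_text(text: str, chunk_size: int = 40, overlap: int = 10) -> list[str]:
    """Split text into word-based chunks; consecutive chunks share `overlap` words."""
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        # Stop once the current chunk has reached the end of the text.
        if start + chunk_size >= len(words):
            break
    return chunks


def toy_embed(text: str, dim: int = 64) -> list[float]:
    """Hypothetical stand-in for an embedding model.

    Each word is hashed into one of `dim` buckets and the resulting count
    vector is L2-normalized. It captures word overlap only, not meaning.
    """
    vec = [0.0] * dim
    for word in text.lower().split():
        digest = hashlib.sha256(word.encode("utf-8")).digest()
        index = int.from_bytes(digest[:4], "big") % dim
        vec[index] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec
```

The overlap between chunks is a common design choice: it reduces the chance that a sentence relevant to a query is cut in half at a chunk boundary.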

These embeddings are then stored in a Vector Database together with structured metadata attributes. This step is pivotal because the store serves as the backbone for all subsequent retrieval. The RAG service runs a top-k similarity search over the stored chunks, restricted by metadata filters, and passes the best matches to the LLM as context. This combination ensures that the context presented to the LLM closely matches the user's question.
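To make filtered top-k retrieval concrete, here is a small in-memory sketch. `Record` and `InMemoryVectorStore` are hypothetical names invented for this example and do not correspond to any particular vector database product; real systems use approximate nearest-neighbor indexes instead of the brute-force scan shown here.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Record:
    text: str
    vector: list[float]
    metadata: dict[str, str] = field(default_factory=dict)


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class InMemoryVectorStore:
    """Illustrative store: exact-match metadata filter, then brute-force top-k."""

    def __init__(self) -> None:
        self._records: list[Record] = []

    def add(self, record: Record) -> None:
        self._records.append(record)

    def search(self, query_vector, k=3, where=None):
        where = where or {}
        # Apply the metadata filter first so only permitted chunks are scored.
        candidates = [
            r for r in self._records
            if all(r.metadata.get(key) == value for key, value in where.items())
        ]
        scored = [(cosine(query_vector, r.vector), r) for r in candidates]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return scored[:k]
```

Filtering before scoring means a chunk outside the allowed department can never reach the LLM, no matter how similar it is to the query.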

Dynamic Context Discovery adapts the retrieval strategy to the user's intent. By analyzing the query at hand, DCD chooses the most applicable data chunks to inform the LLM's generation process, which improves the accuracy and relevance of responses.

Finally, integrated backup mechanisms keep the system resilient against unforeseen failures. These mechanisms take periodic snapshots of both the vector store and the metadata store, enabling data recovery and reliability without compromising speed and efficiency.
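A snapshot-and-restore cycle might look like the sketch below. It assumes the vectors and metadata can be serialized together as a list of JSON-compatible dictionaries, and the function names and file naming scheme are hypothetical. Managed vector databases usually provide their own snapshot facilities, which should be preferred where available.

```python
import json
import time
from pathlib import Path


def write_snapshot(records: list[dict], snapshot_dir: Path) -> Path:
    """Write vectors and metadata together to a timestamped JSON file."""
    snapshot_dir.mkdir(parents=True, exist_ok=True)
    target = snapshot_dir / f"snapshot-{time.time_ns()}.json"
    tmp = target.with_suffix(".tmp")
    tmp.write_text(json.dumps(records), encoding="utf-8")
    # Rename only after the write completes, so readers never see a partial file.
    tmp.replace(target)
    return target


def restore_latest(snapshot_dir: Path) -> list[dict]:
    """Load the newest snapshot, or return an empty list if none exists."""
    snapshots = sorted(
        snapshot_dir.glob("snapshot-*.json"),
        key=lambda path: int(path.stem.split("-")[1]),
    )
    if not snapshots:
        return []
    return json.loads(snapshots[-1].read_text(encoding="utf-8"))
```

Storing vectors and metadata in the same snapshot keeps the two consistent with each other on restore, which matters because a vector without its metadata cannot be filtered or access-controlled.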

The overall architecture is typically deployed in a scalable cloud-native environment, which lets businesses take advantage of the flexibility of cloud services. Deployed this way, AI applications can serve immediate operational needs and continue to adapt as the requirements of their specific industries change.

In conclusion, the synergy among Vector Databases, metadata, RAG services, and Dynamic Context Discovery represents a significant advancement in the construction of AI architectures. By addressing common challenges and focusing on domain-specific requirements, these systems promise to enhance performance and reliability, guiding enterprises towards more intelligent and insightful decision-making.
