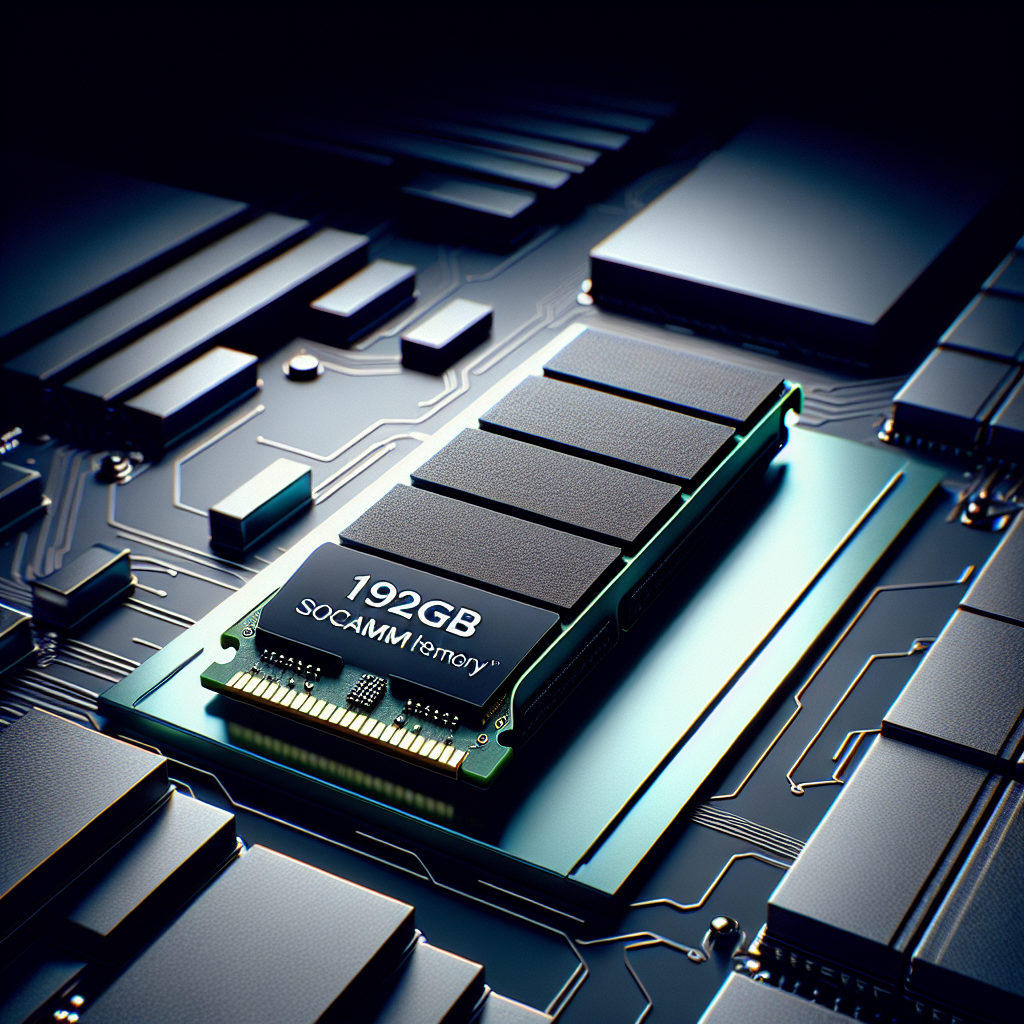

Micron Technology has announced that it is sampling its latest high-capacity memory module, the 192GB SOCAMM2. The new product targets artificial intelligence (AI) servers, which need more memory capacity to handle complex workloads efficiently. Micron claims the module is the highest-capacity SOCAMM2 available globally.

Micron’s announcement comes at a time when the AI sector is experiencing exponential growth, necessitating enhancements in data center capabilities. As AI workloads escalate, the balance between energy efficiency and capacity becomes ever more critical. Raj Narasimhan, senior vice president and general manager of Micron’s Cloud Memory Business Unit, underscored this perspective, emphasizing that the requirement for data center servers to maximize efficiency is paramount. The SOCAMM2 aims to deliver superior data throughput while minimizing power consumption, enabling the next generation of AI data centers.

The specifications of the 192GB SOCAMM2 are noteworthy. Compared to its predecessor, the first-generation LPDRAM SOCAMM, Micron’s latest offering provides 50% more capacity in the same physical footprint. According to Micron, the added capacity can reduce the time to first token (TTFT) for real-time AI inference workloads by more than 80%, a crucial gain for latency-sensitive applications. The module also delivers a 20% improvement in power efficiency, which adds to its appeal in energy-conscious data centers.

At scale, the implications of this power efficiency are significant. Full-rack AI installations now include more than 40 terabytes (TB) of CPU-attached low-power DRAM main memory, so a transition to Micron’s 192GB SOCAMM2 could yield substantial power savings across large deployments. The module’s low-power characteristics matter most to data center operators who want to curb energy costs while maintaining high performance.
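To put the rack-scale figure in perspective, the following is a rough back-of-the-envelope sketch in Python, not a figure from Micron. It assumes a rack with exactly 40 TB of CPU-attached memory (the article says "more than 40 TB"), binary units (1 TB = 1024 GB), and a first-generation module capacity inferred from the stated 50% increase. The function name `modules_needed` is purely illustrative.

```python
import math

# Figures taken from the article
MODULE_GB_NEW = 192        # 192GB SOCAMM2
CAPACITY_GAIN = 0.50       # "50% increase in capacity" over first-generation SOCAMM
RACK_MEMORY_TB = 40        # "more than 40 TB" per rack; treated here as a lower bound

# Assumptions (not stated in the article)
GB_PER_TB = 1024           # binary units
MODULE_GB_OLD = MODULE_GB_NEW / (1 + CAPACITY_GAIN)  # implied predecessor capacity


def modules_needed(total_gb: float, module_gb: float) -> int:
    """Smallest whole number of modules that reaches the target capacity."""
    return math.ceil(total_gb / module_gb)


rack_gb = RACK_MEMORY_TB * GB_PER_TB
new_count = modules_needed(rack_gb, MODULE_GB_NEW)
old_count = modules_needed(rack_gb, MODULE_GB_OLD)

print(f"Implied first-generation capacity: {MODULE_GB_OLD:.0f} GB")
print(f"Modules for {RACK_MEMORY_TB} TB at {MODULE_GB_NEW} GB each: {new_count}")
print(f"Modules for {RACK_MEMORY_TB} TB at {MODULE_GB_OLD:.0f} GB each: {old_count}")
```

Under these assumptions, the same rack capacity needs roughly a third fewer modules with the newer part. The sketch says nothing about actual power draw, which depends on figures the article does not give.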

Micron’s technological advancements in the SOCAMM2 stem from the low-power DRAM technologies originally designed for mobile devices. This transition necessitated specialized design features and enhanced testing protocols to ensure that the memory modules could stand up to the rigorous demands of data centers. Micron asserts that its expertise in low-power DRAM underpins the functionality of the SOCAMM2, marking a significant upgrade over traditional RDIMMs.

According to Micron, SOCAMM2 modules improve power efficiency by more than two-thirds compared with RDIMMs while packing their performance into a module one-third the size. This compact design not only optimizes data center footprint but also boosts overall capacity and bandwidth, both essential for data centers running large-scale AI tasks. Additionally, the modular design and innovative stacking technology improve serviceability and aid the design of liquid-cooled servers that respond to the thermal challenges posed by high-performance AI computing.

In conclusion, Micron’s launch of the 192GB SOCAMM2 memory module marks a notable advance in memory technology for AI applications. The product not only meets the growing demand for memory in the data center sector but also offers substantial improvements in power efficiency and inference responsiveness. As AI continues to permeate various industries, scaling memory solutions like Micron’s SOCAMM2 will become vital to supporting the infrastructure needed for future advances in artificial intelligence. The implication for business leaders and investors is clear: those who adopt such memory technologies early are likely to be better positioned in the competitive landscape of AI-driven markets.
